Administrative data for HAI surveillance: fuhgeddaboudit!

There's a new paper in Clinical Infectious Diseases by Eli and company that is the definitive treatise on the use of administrative claims data for healthcare-associated infection (HAI) surveillance. I have never been a fan of using coded data for this purpose, for two reasons: (1) it shifts the important work of surveillance from trained infection preventionists to medical records abstractors, who are not trained in surveillance methodology and who can only review notes written by physicians; and (2) while it's a quick, cheap, and dirty method, it's wildly inaccurate, as Eli's group shows. The poor performance of coded data shouldn't be too surprising, since these codes were developed for billing purposes, not epidemiologic surveillance.

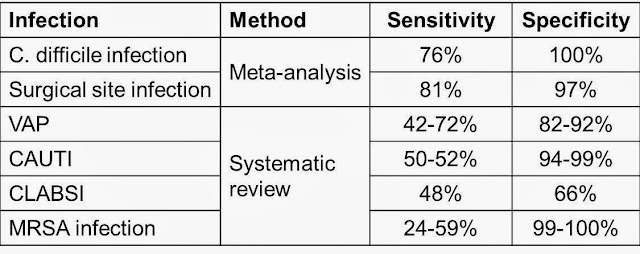

The authors performed a systematic review of the literature and found 19 papers for analysis. In each paper, coded data were compared to either microbiologic data (C. difficile and MRSA) or manual chart review using standardized case definitions (SSI, VAP, CAUTI, CLABSI). Meta-analysis was performed when enough studies were available. Results are summarized in the table below.

As can be seen in the table, specificity of coded data is generally good to excellent, while sensitivity ranges from bad to horrible. I should mention there is a brand new study looking at CLABSI in the American Journal of Medical Quality, which found a sensitivity of 33% and specificity of 99%.
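For anyone who wants to see what those two numbers mean in practice, here is a minimal Python sketch of how sensitivity and specificity fall out of a 2x2 comparison of coded data against a reference standard (chart review or microbiology). The function name and the counts are hypothetical: the counts were picked only so the arithmetic lands on 33% and 99%, and they are not taken from any of the studies mentioned here.

```python
def sensitivity_specificity(tp, fn, fp, tn):
    """Return (sensitivity, specificity) from 2x2 counts.

    tp: true infections that were coded as infections
    fn: true infections that the codes missed
    fp: coded infections that were not true infections
    tn: non-infections that were correctly left uncoded
    """
    sensitivity = tp / (tp + fn)  # share of true cases the codes catch
    specificity = tn / (tn + fp)  # share of non-cases the codes leave alone
    return sensitivity, specificity


# Hypothetical counts for illustration only (not study data):
# 100 true infections, 1,000 patients without infection.
sens, spec = sensitivity_specificity(tp=33, fn=67, fp=10, tn=990)
print(f"sensitivity = {sens:.0%}, specificity = {spec:.0%}")
```

With counts like these, the codes rarely flag a patient who isn't infected, but they miss two out of every three patients who are, which is the pattern described above.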

Perhaps the forthcoming ICD-10 will help, but the fundamental issue of only reviewing physician notes will remain. More sophisticated methods that use computerized algorithms to analyze electronic medical records for case detection will probably be the ultimate solution.
